AI basics: How TPU is different from CPU and GPU

The field of AI is notoriously filled with jargon. To help readers understand what is going on, this series of explainers breaks down some of the most common terms used in AI and why they are important.

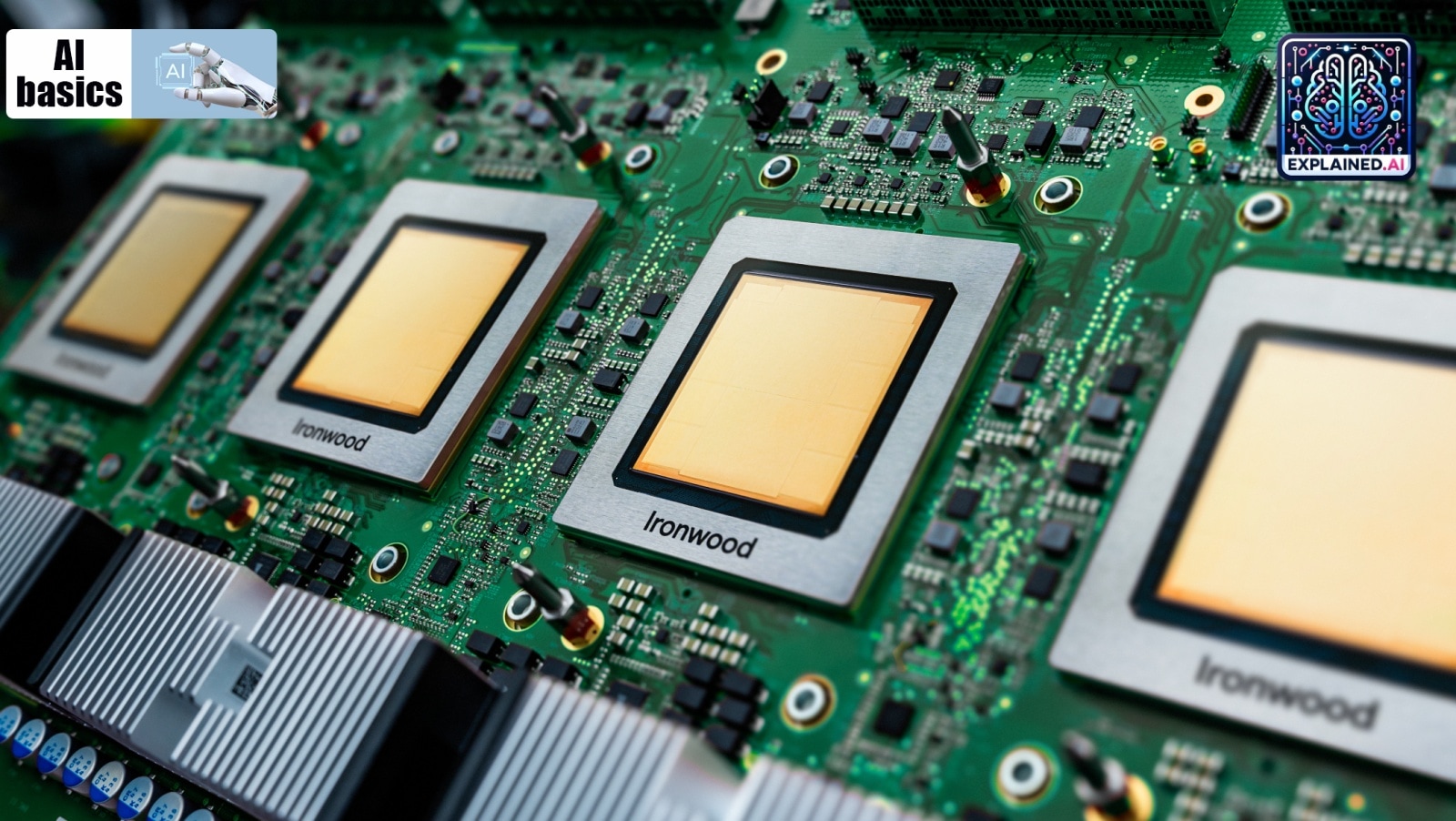

Google first introduced TPUs in 2015. (Photo: Google)

Google last week launched a new computer chip, called Ironwood. It is the company’s seventh-generation TPU, or tensor processing unit, which has been designed to run artificial intelligence (AI) models.

Here is a look at what a TPU is and how it differs from other processors such as the central processing unit (CPU) and the graphics processing unit (GPU).

But first, what are processing units?

Processing units are essentially the hardware that acts as the brain of a computer. Just as the human brain handles tasks such as reading or solving a math problem, processing units perform tasks too. These could be doing calculations, taking a picture, or sending a text.

What is a CPU?

Developed in the 1950s, a CPU is a general-purpose processor that can handle various tasks. It is like a conductor in an orchestra, controlling all the other components of the computer — from GPUs to disk drives and screens — to perform different tasks.

A CPU has at least one core, the processing unit within the CPU that executes instructions. In the early years, CPUs had just one core, but today consumer chips typically contain anywhere from two to 16 cores, and some contain more. As each core can handle one task at a time, a CPU’s ability to multitask is determined by the number of cores in the hardware.

“Generally, two to eight cores per CPU is enough for whatever tasks a layman may need, and performance of these CPUs are quite efficient to the point that humans can’t even notice that our tasks are being executed in a sequence instead of all at once,” according to a report in DigitalOcean.
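The link between core count and multitasking can be sketched in a few lines of Python. This is only a rough model, and it assumes every task takes the same amount of time and that each core handles exactly one task per round; the function name `rounds_needed` is hypothetical and used purely for illustration.

```python
import math
import os


def rounds_needed(num_tasks: int, num_cores: int) -> int:
    """Rounds of work needed if each core handles one equal-sized task per round."""
    if num_cores < 1:
        raise ValueError("a CPU needs at least one core")
    return math.ceil(num_tasks / num_cores)


# Illustrative comparison: the same 16 tasks on CPUs with different core counts.
for cores in (1, 2, 8, 16):
    print(f"{cores:>2} core(s): {rounds_needed(16, cores)} round(s) for 16 tasks")

# os.cpu_count() reports the logical cores on this machine (it may return None).
print("Logical cores on this machine:", os.cpu_count() or "unknown")
```

Under these assumptions, a single core works through the tasks one after another, while more cores finish the same work in fewer rounds.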

What is a GPU?

Unlike a CPU, a GPU is a specialised processor (it is sometimes described as a type of application-specific integrated circuit, or ASIC) designed to perform many tasks concurrently rather than sequentially, as a CPU does. Modern GPUs comprise thousands of cores, which break complex problems down into thousands or millions of separate tasks and work them out in parallel, a concept known as parallel processing. This makes GPUs far more efficient than CPUs for workloads that can be split up in this way.
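The idea of breaking one problem into separate tasks can be illustrated with a short Python sketch. It is not how a GPU is actually programmed: it assumes a small pool of threads standing in for GPU cores, and the helper names (`split_into_chunks`, `parallel_sum_of_squares`) are hypothetical. It shows only the pattern of splitting the work, handling the pieces independently, and combining the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, num_chunks):
    """Split a list into up to num_chunks roughly equal pieces."""
    size = max(1, -(-len(values) // num_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The small, independent task that each worker performs."""
    return sum(v * v for v in chunk)


def parallel_sum_of_squares(values, num_workers=4):
    """Hand each chunk to a worker, then combine the partial results."""
    chunks = split_into_chunks(values, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


print(parallel_sum_of_squares(list(range(10))))
```

The split works here because no chunk needs the result of any other chunk, which is the property that makes a workload suitable for parallel processing.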

Initially developed for graphics rendering in gaming and animation, GPUs today are far more flexible and have become the bedrock of machine learning.

“While graphics and hyper-realistic gaming visuals remain their principal function, GPUs have become more general-purpose parallel processors, able to execute many tasks simultaneously to handle a growing range of applications, including AI,” according to a report on Intel’s website.

This does not mean that GPUs have replaced CPUs. In certain situations, such as when each step of a task depends on the result of the previous one, it is more efficient to perform the task sequentially than in parallel. GPUs are therefore used today as co-processors that work alongside the CPU to increase performance.
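A small Python sketch of the kind of task that resists parallel processing: each step needs the result of the one before it, so the work cannot be handed out to many cores at once. The update rule below is arbitrary and chosen only for illustration, and the function name `iterate_sequentially` is hypothetical.

```python
def iterate_sequentially(start: int, steps: int) -> int:
    """Apply the same update rule repeatedly; every step depends on the last."""
    value = start
    for _ in range(steps):
        # The next value cannot be computed until the current one is known.
        value = (value * 3 + 1) % 1000
    return value


print(iterate_sequentially(7, 3))  # 7 -> 22 -> 67 -> 202
```

For a chain like this, extra cores have nothing to do, because there is only ever one step ready to run.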

To better understand this, consider an analogy. The CPU is like Carmy from the television series The Bear, the head chef of his restaurant, who has to make sure that hundreds of patties get flipped. He can do it himself, but that would be time-consuming. So Carmy takes help from Sydney, the sous-chef, who can flip patties in parallel. The GPU is like a Sydney with 10 hands, who can flip 10 patties at the same time.

What is a TPU?

A TPU is also a type of ASIC, meaning it is designed to perform a narrow range of tasks. First used by Google in 2015, TPUs were built specifically to accelerate machine learning workloads.

“We designed TPUs from the ground up to run AI-based compute tasks, making them even more specialised than CPUs and GPUs. TPUs have been at the heart of some of Google’s most popular AI services, including Search, YouTube and DeepMind’s large language models,” Chelsie Czop, a product manager who works on AI infrastructure at Google Cloud, said in an interview.

TPUs are engineered to handle operations on tensors, a generic name for the data structures used in machine learning. They excel at processing large volumes of data and executing complex neural networks efficiently, enabling fast training of AI models. While some AI models can take weeks to train on GPUs, the same process can, in some cases, be completed within hours using TPUs.
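To make the term concrete, here is a minimal Python sketch that treats a tensor as a nested list of numbers and performs one common tensor operation, matrix multiplication, which sits at the core of neural network computation. Plain lists and the helper names `shape` and `matmul` are assumptions made for illustration; real machine learning frameworks use dedicated array types and hand this work to hardware such as TPUs.

```python
def shape(tensor):
    """Return the dimensions of a nested-list tensor, e.g. (2, 3) for 2 rows x 3 columns."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)


def matmul(a, b):
    """Multiply two 2-D tensors (matrices) using the textbook row-by-column rule."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("columns of a must match rows of b")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


inputs = [[1, 2], [3, 4]]   # made-up example values
weights = [[5, 6], [7, 8]]  # made-up example values
print(shape(inputs))            # (2, 2)
print(matmul(inputs, weights))  # [[19, 22], [43, 50]]
```

Every entry of the result is computed independently of the others, which is why this kind of operation suits hardware built to do many multiplications and additions at once.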
