However, several users who have access to GPT-4o have taken the Internet by storm with demos of the new model’s capabilities. I tried out GPT-4o in some real-life scenarios, and here are some of the use cases I found particularly impressive.

1. Interpret human faces

This is a unique feature that I found very interesting and amusing. I uploaded a random picture of myself to ChatGPT with a simple prompt – “Interpret face at human level”. Within a few seconds, the chatbot analysed the image and shared its interpretation. ChatGPT, powered by GPT-4o, made a fairly accurate assessment of the image. It correctly described the setting, the arrangement of the room, the type of bedspread, and more. It even commented on my facial expression and quite accurately identified the objects in the background.

(Screengrab: Bijin Jose/The Indian Express)

“In the photo, you appear to be indoors, sitting on a bed with a patterned bedspread. You’re wearing an orange shirt and have a neutral expression. Your hair is styled and you have some facial hair. The background includes a pillow and some books,” read the response.

2. Transcribing handwritten notes from a time long gone

To try this, I downloaded a random image of a letter written by someone in the early 19th century. I used the prompt, “What is this document? Transcribe it and help me understand it.”

GPT-4o understood the task and identified the date and the content, with some minor errors. It described the note as follows: “The letter is written in early 19th-century English and is addressed to Major Cartwright, who lived at 17 Surrey Street, Blackfriars. The writer expresses regret that they must leave London the following day, preventing them from meeting Major Cartwright as promised.”

(Screengrab: Bijin Jose/The Indian Express)

The document I uploaded was an unpublished letter by the famous British poet Lord Byron, which was auctioned in January this year. When asked who wrote the letter, GPT-4o could not give a direct answer but offered contextual clues, such as the date and historical context, the recipient, and the content.

“Given these clues, we can speculate that the author might have been someone involved in political reform or who had business or social dealings with Major Cartwright. However, without a clear signature or more specific details about the writer, it is challenging to definitively identify the author of the letter,” read ChatGPT’s response. This feature could prove to be a boon for academicians and researchers.

3. Describe any image

If you were blown away by GPT-4o’s ability to read expressions on faces in pictures, this capability is likely to impress you even more. For this test, I clicked a picture of the dashboard of the car I was travelling in, which had a typical infotainment system with an array of buttons and lights. I used the prompt – “What do you see?”

“The image shows the interior of a car at night. The focus is on the dashboard and the infotainment system, which is displaying music information. The song playing is ‘Other Side’ by Pearl Jam from the album ‘Lost Dogs’. The infotainment screen shows it’s connected via Bluetooth, and the time is 9:25 PM. Below the screen, there are physical buttons for audio control and climate control settings. The car is at a stop, as indicated by the red traffic light visible through the windshield,” responded GPT-4o.

(Screengrab: Bijin Jose/The Indian Express)

The chatbot described the image so vividly that we leapt in excitement. What impressed me most was that it read the traffic light, which was quite a distance away.

4. Dietitian on the go

Our next stop was Delhi’s famed Karim’s restaurant. After we ordered dinner, I clicked a picture of what was served to us using the ChatGPT app on my iPhone, with the prompt – “What is this, how much calories could it have?”

GPT-4o responded by saying, “The dish in the image appears to be a type of curry, likely a meat curry such as lamb or beef, garnished with julienned ginger and cilantro. The exact calorie content can vary significantly depending on the specific recipe and portion size, but here is a general estimate for a typical meat curry: Calories – Approximately 250-400 calories per serving (1 cup or 240ml); Protein – Around 20-30 grams; Fat – Around 15-25 grams; Carbohydrates – Around 5-15 grams.”

(Screengrab: Bijin Jose/The Indian Express)

The dish we ordered was Nalli Nihari, a meat curry dripping with oil, ghee, and spices. While the information ChatGPT derived from the image seemed accurate, the chatbot also added a caveat – “This estimate can vary based on the type of meat used, the amount of oil or ghee, and any additional ingredients like cream or yoghurt. For a more precise calorie count, you would need the specific recipe and portion size.” Even so, this could turn out to be a boon for health-conscious individuals.

5. A new way to navigate

There are many maps and navigation apps to help people find their way around. With GPT-4o’s vision capabilities, finding out about locations and exploring historic monuments could be both fun and educational. It should be noted, however, that the results may not always be accurate, especially since OpenAI maintains that the models are still being trained. I uploaded a random image and asked – “What part of Delhi is this?”

“The photo you shared is of the Jama Masjid in Delhi. It is located in the central part of Old Delhi, near Chandni Chowk. The Jama Masjid is one of the largest and most famous mosques in India, built by Mughal Emperor Shah Jahan,” responded ChatGPT.

(Screengrab: Bijin Jose/The Indian Express)

While this was accurate, the first image I uploaded drew an unusual response. Standing a few metres from Jama Masjid, I uploaded a picture of a busy alleyway lined with shops and food carts and asked – “I think I am lost, what part of Delhi could this be?”

GPT-4o responded by saying, “Based on the image, it looks like you might be in the Paharganj area of Delhi. This neighbourhood is known for its bustling markets, crowded streets, and a mix of shops, restaurants, and budget accommodations. The vibrant nightlife and the variety of neon signs are also characteristic of Paharganj. If you need directions or help navigating, feel free to ask!”

Screenshot

While the text response was impressive, the fact of the matter was that I was barely a few metres from Jama Masjid, and Paharganj is around 3 km away from there. Regardless, this shows the potential of AI chatbots to help people navigate unfamiliar territory in the future.

6. Translation for travellers

GPT-4o’s vision capabilities not only let the model process and respond to visual inputs but also let it read, describe, and respond to text in uploaded images and screenshots. To test its ability to translate, I uploaded an image of a cigarette box with Turkish inscriptions. I used the prompt – “Help me understand what is written on this box.”

“The text on the box is in Turkish. Here is the translation – Gebelikte sigara içen annelerin bebekleri, anne karnında yeterince büyüyemez ve doğum sonrası bu bebeklerin hastalık, engellilik ve ölüm riski artar – Babies of mothers who smoke during pregnancy cannot grow adequately in the womb, and after birth, these babies have an increased risk of illness, disability, and death.”

(Screengrab: Bijin Jose/The Indian Express)

This feature could be a lifesaver for solo travellers who need to translate signboards on the spot. GPT-4o supports over 50 languages and shows considerable improvements in text processing for non-English languages.

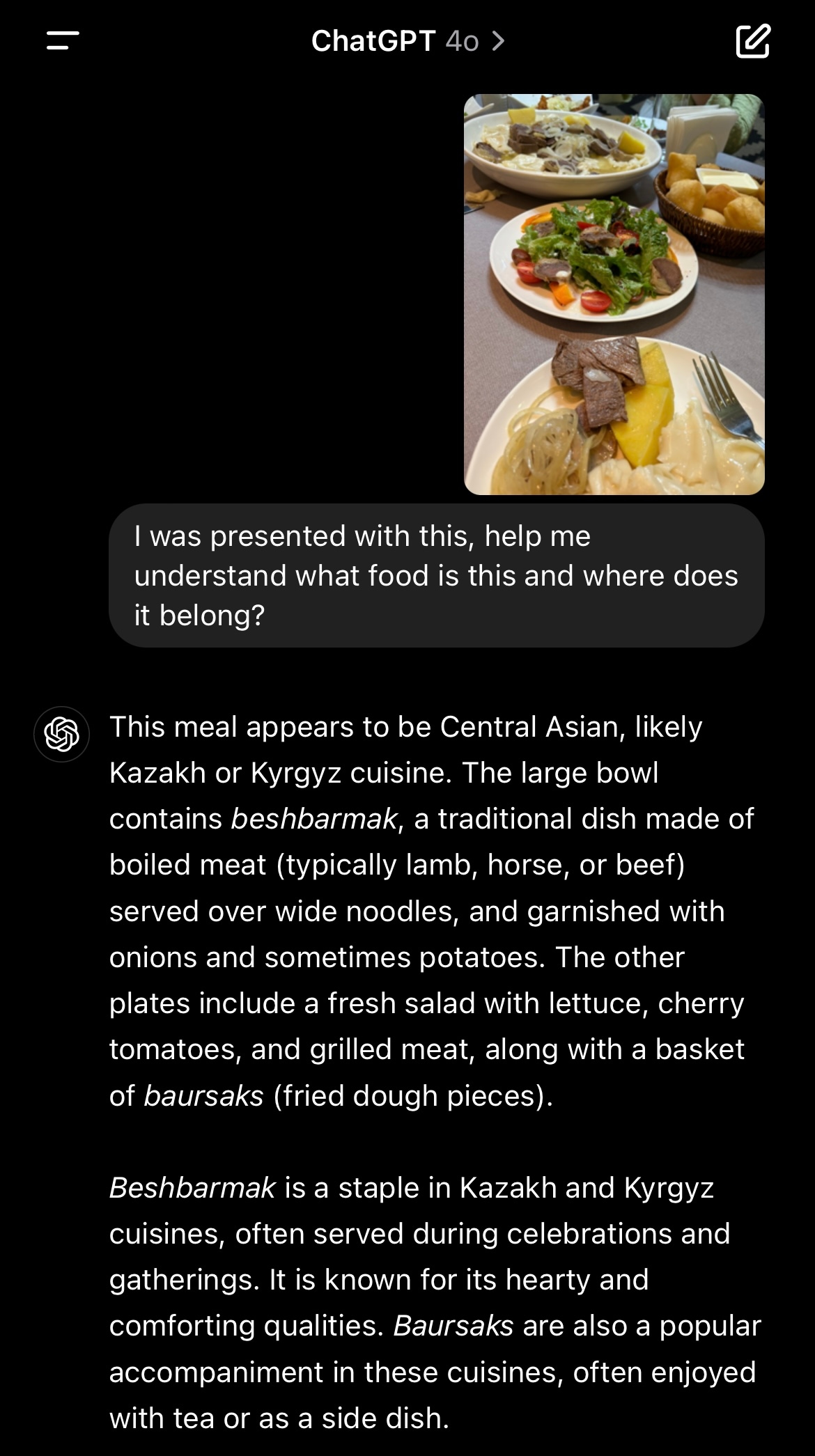

7. Understanding cultural contexts

GPT-4o can analyse almost any kind of picture. To test its contextual awareness, I uploaded an image of a dish I was served on a recent trip to Kazakhstan. I used the prompt – “I was presented with this, help me understand what food this is and where does it belong?”

ChatGPT responded by saying, “This meal appears to be Central Asian, likely Kazakh or Kyrgyz cuisine. The large bowl contains beshbarmak, a traditional dish made of boiled meat (typically lamb, horse, or beef) served over wide noodles, and garnished with onions and sometimes potatoes. The other plates include a fresh salad with lettuce, cherry tomatoes, and grilled meat, along with a basket of baursaks (fried dough pieces).” This information is accurate, and this use case could come in handy for educational purposes.

(Screengrab: Bijin Jose/The Indian Express)

It should be noted that the real-time video analysis OpenAI showcased with GPT-4o on May 13 has yet to be rolled out. While the use cases above showcase GPT-4o’s remarkable potential, they are merely the tip of the iceberg.

As OpenAI continues development and users dive deeper, GPT-4o’s capabilities are likely to evolve rapidly. This exploration was conducted using a ChatGPT Plus account, offering a sneak peek into the future of AI-powered interactions.
