What happens when ChatGPT has to solve a basic math problem? Check out its response

The ability to acknowledge mistakes and correct itself accordingly is one of ChatGPT's defining features, and it's hard not to be impressed by how good the AI chatbot is at it.

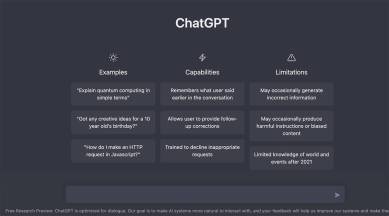

Tell someone from just half a decade ago that, five years down the line, AI would be able to communicate almost as well as humans, and you'd probably be met with surprised faces. But 2022 is on its last legs now, and frighteningly convincing new AI chatbots keep coming. The trend was set by ChatGPT, the viral AI-powered chatbot capable of holding natural, back-and-forth conversations. It already has multiple practical use cases despite its 'beta' status.

However, the truth is that ChatGPT is only designed to mimic human styles of conversation and is anything but human in the way it processes things in the background. OpenAI, the bot's creator, has warned of the bot's propensity to output misinformation. But because it's so good at imitating human styles, it's very easy to take it at its word.

An excellent example of the bot's riskiness and its ability to spread misinformation comes from a recent tweet by Peter Yang, a product lead at Reddit.

When Yang presented a very basic math question to ChatGPT, it gave a somewhat convincing answer – only it wasn’t right at all. Anyone who can see past the trickiness of the question will tell you that the correct answer is, in fact, 67. Making matters worse is the fact that the answer is so detailed. You’d probably be less inclined to believe the bot had it responded with a simple “73.”

But while Yang seems to have a negative outlook on the bot, as is apparent from his remark, ChatGPT has a trick up its sleeve: the ability to admit its mistakes and correct them, which also happens to be one of its defining features.

Another user showed that when they pointed out to the bot that its answer was incorrect, the bot apologised and took another stab at the problem, this time giving the right answer.

It's also important to reiterate that ChatGPT in its current form, despite its grandeur, is still in its early stages. While GPT-3.5, the language model that ChatGPT is built on top of, is the third iteration, it still has a long way to go. GPT-4, expected to be released in late 2023, is likely to address the shortcomings of GPT-3.5 with better performance and better alignment with human commands and values.